Vision Is Key for Robot Autonomy

Another key to robot autonomy is the ability of robots to visually navigate and interact with their surroundings. A good example of this is AEye’s Intelligent Detection and Ranging technology, which enables vehicles to see, classify, and respond to objects in real time.

Improvements in vision have led to a wide range of robot-based applications, such as security (thanks to improved facial recognition capabilities), public safety (through the detection of potentially harmful objects in public spaces), and manufacturing (in quality control systems).

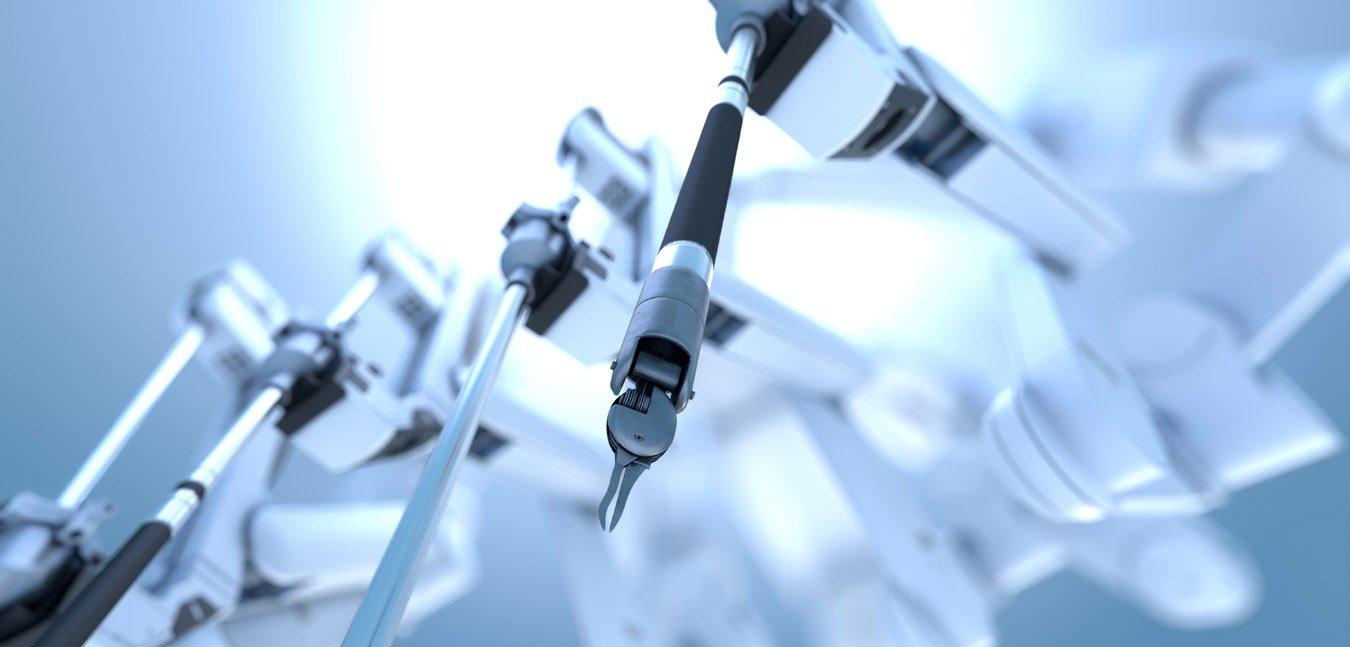

Computer vision and robotics are also having a revolutionary impact on healthcare. In one instance, scientists were able to train a neural network to read CT scan images and identify neurological disorders faster than humans can. In another, they trained an AI to diagnose certain cancers more quickly.

Beyond these powerful diagnostic use cases, we see robotics playing an even more direct role in patient care. These applications range from minimally invasive robotic surgery, to remote-presence robots that allow doctors to interact with patients without geographical constraint, to interactive robots that can help relieve patient stress.

This impact will be felt both in the level of autonomy consumers expect in their products and in the level of autonomy that we, as a society, feel comfortable building into our products. For example…

How many of us feel comfortable hearing the phrases “autonomous weapons system” or “autonomous police unit”?

As it evolves, autonomous technology will influence not only the types of products we produce, which we will increasingly need to design either to interact with or to enhance autonomous systems, but also our architecture, our workplaces, and the urban environment itself.